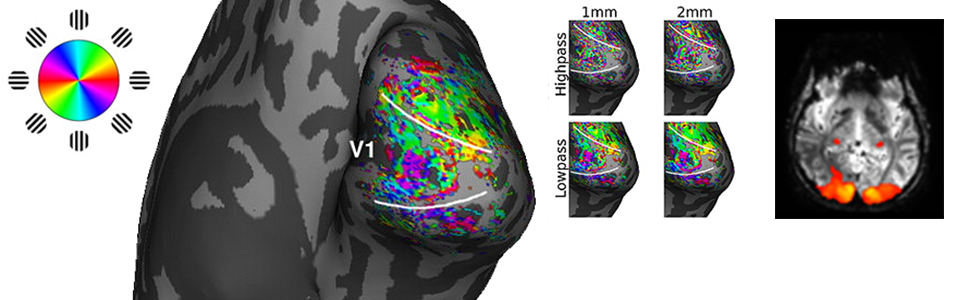

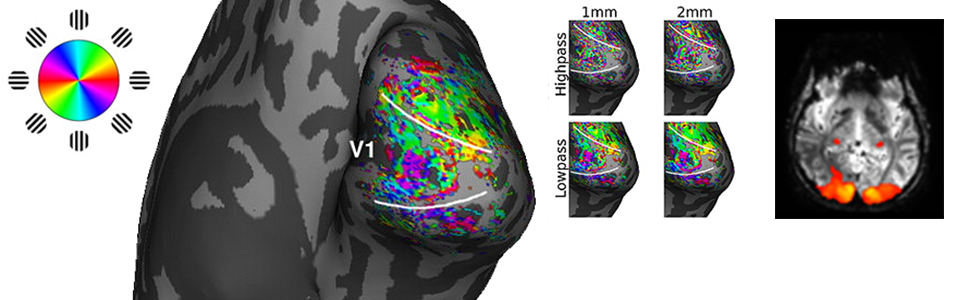

HIGH-RESOLUTION fMRI AT 7 TESLA

The Tong Lab uses high-resolution fMRI at 7 Tesla to investigate the functional role of the early visual system in visual perception, attentional selection, figure-ground processing, predictive coding, and visual working memory.

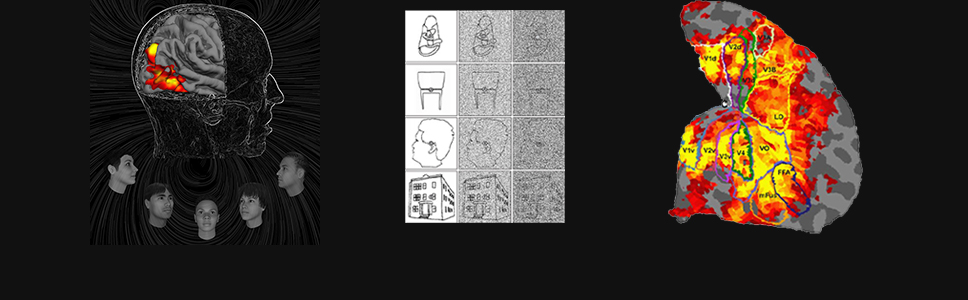

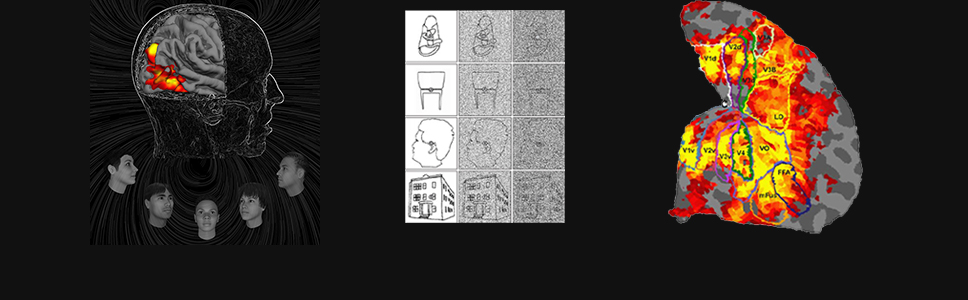

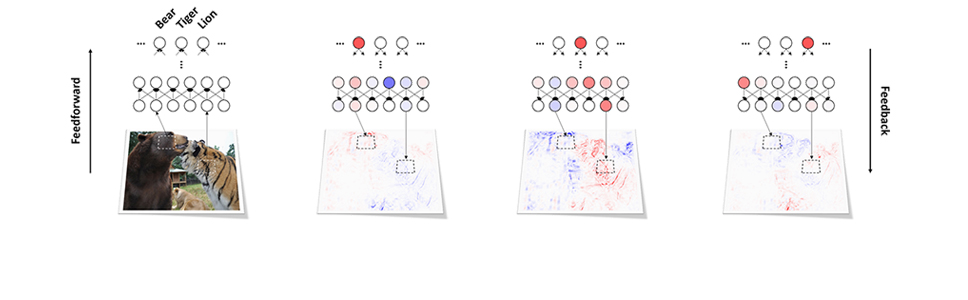

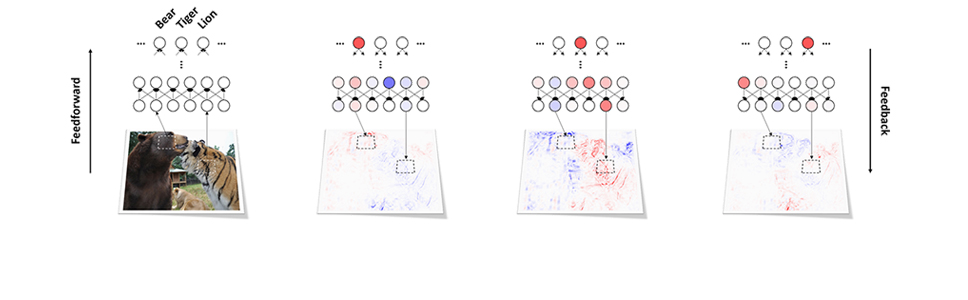

FACE AND OBJECT PROCESSING

We study the neurocomputational bases of face and object processing using behavioral methods, functional neuroimaging, transcranial magnetic stimulation (TMS), and the development of convolutional neural networks (CNNs) as models of human recognition performance.

MECHANISMS OF VISUAL ATTENTION

Our lab investigates how bottom-up saliency and top-down attentional signals interact in the early visual system. We are also developing and testing a neurocomputational model of object-based attentional selection.

VISUAL WORKING MEMORY

The Tong Lab pursues research on the behavioral and neural bases of visual working memory. Our goal is to characterize and model the neural representations that underlie visual working memory.

DEEP LEARNING NETWORKS OF VISUAL PROCESSING

A growing focus of the lab is the application and development of deep learning networks as potential models of human visual performance, especially for object recognition tasks. We are currently working with a variety of networks, including AlexNet, VGG-19, GoogLeNet, ResNet, and Inception-v3. We have also begun constructing our own deep network architectures.
